Antropy MD Paul Feakins Gets Certified in Machine Learning

What is Machine Learning?

I've just completed Andrew Ng's excellent Machine Learning course from Stanford University. While Machine Learning (ML) is not a new field in computer science, recent advances in computing power and an explosion in the amount of data available have made some unbelievable things possible!

Simply put, ML is where a machine can learn to produce meaningful output after being "trained" on a large volume of existing data. Rather than a "dumb" computer only understanding true or false, and every detail being programmed by a human, ML allows a computer to respond to images, sound and other types of input in ways that have previously been the reserve of humans.

Ng's agreeable, humble, friendly style helps you to stick with him through the more challenging topics, but don't be fooled: he is one of the world's top machine learning experts, having co-founded Coursera.org and Google Brain and headed up teams at some of the biggest tech companies, including Baidu, China's equivalent to Google. You can tell he knows his stuff when a large section of his CV is devoted to his notable students, many of whom are big names in ML and AI themselves!

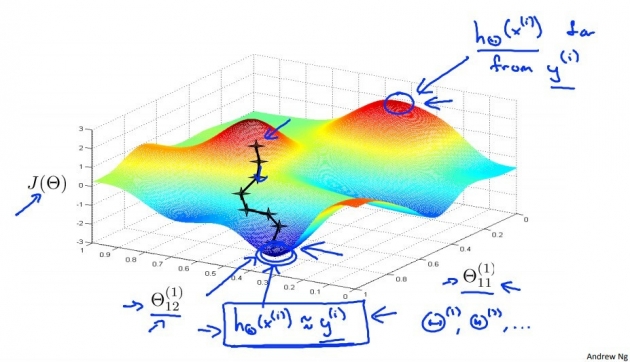

With that in mind it came as no surprise that the course was incredible - absolutely fascinating - but also relatively difficult in places, as many of the algorithms are expressed only in formal maths notation. Indeed, many of the programming assignments were to convert maths formulas to Octave (or MATLAB) code.

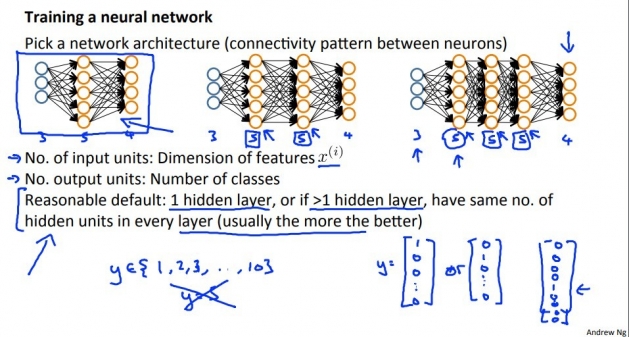

It really was worth the effort though, as understanding how neural networks work, what they can do, their limits, how to build them, and why they require GPUs (Graphics Cards) is very likely going to be important in our work in the years ahead.

What was covered?

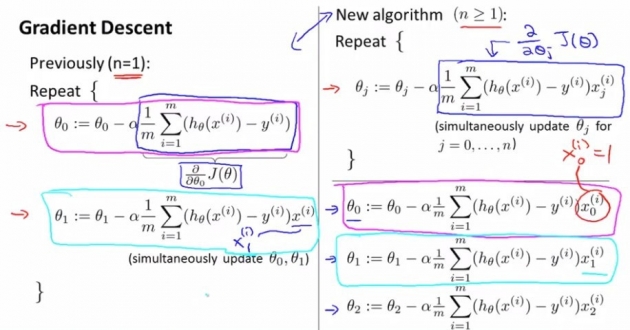

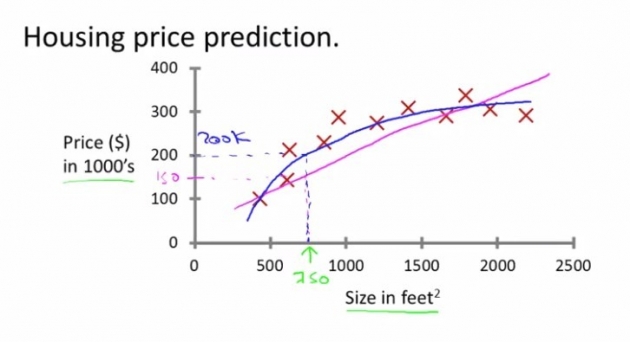

The course starts off with examples of how Machine Learning can predict the price of a house given a set of variables such as the square footage, number of rooms etc. by using Linear Regression and Gradient Descent. I was hoping to learn about AI and yet here I was back in A-level maths drawing graphs. By multiplying each variable by a number (a "parameter") and then adding the results, it was possible to predict the house price.

It was new to me that when you multiply matrices (tables of numbers), rather than multiplying each element with the corresponding element, you actually multiply each row of the first matrix with each column of the second and sum the results to generate a new matrix. If you have a matrix of houses with their features (square feet etc.) and another matrix of "parameters", i.e. the numbers to multiply each feature by, matrix multiplication outputs a handy table of the predicted house price for each house.
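To make the "rows times columns" idea concrete, here is a minimal sketch in plain Python (not the Octave code from the course). The house data, the parameter values and the leading 1 in each row (which lets the first parameter act as a base price) are all made up for illustration.

```python
def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m); both are lists of rows."""
    if len(a[0]) != len(b):
        raise ValueError("inner dimensions must match")
    return [
        # Each output cell is one row of a times one column of b, summed.
        [sum(a_row[k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for a_row in a
    ]

# Hypothetical houses, one per row: [1 (for the base price), square feet, bedrooms]
houses = [
    [1, 1000, 2],
    [1, 1500, 3],
    [1, 2000, 4],
]

# Hypothetical parameters: base price, price per square foot, price per bedroom
parameters = [
    [50000],
    [100],
    [10000],
]

predicted_prices = matmul(houses, parameters)
print(predicted_prices)  # [[170000], [230000], [290000]]
```

The result has one row per house and a single column holding the predicted price, which is the "handy table" described above.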

Because matrix multiplication is used in computer graphics, GPUs are very fast at it. That is lucky, because to get the correct parameters (train the system), the Gradient Descent algorithm has to start with a guess, measure how far its predictions are from the actual values, adjust the parameters in the direction that reduces the error and then try again, repeating until it settles (converges) on an optimal set.
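A rough sketch of that loop, again in plain Python rather than Octave, might look like the following. It fits a single feature, and the data, learning rate and iteration count are assumptions chosen so that this toy example converges; they are not values from the course.

```python
def gradient_descent(xs, ys, learning_rate=0.1, iterations=5000):
    """Fit y = intercept + slope * x by batch gradient descent on squared error."""
    intercept, slope = 0.0, 0.0  # starting guess
    m = len(xs)
    for _ in range(iterations):
        # How far off is each prediction with the current parameters?
        errors = [intercept + slope * x - y for x, y in zip(xs, ys)]
        # Average gradient of the (halved) squared error for each parameter.
        grad_intercept = sum(errors) / m
        grad_slope = sum(e * x for e, x in zip(errors, xs)) / m
        # Step each parameter a little in the direction that reduces the error.
        intercept -= learning_rate * grad_intercept
        slope -= learning_rate * grad_slope
    return intercept, slope

# Made-up data: size in thousands of square feet, price in thousands,
# generated from price = 50 + 100 * size.
sizes = [1.0, 1.5, 2.0, 2.5]
prices = [150.0, 200.0, 250.0, 300.0]

intercept, slope = gradient_descent(sizes, prices)
print(round(intercept, 3), round(slope, 3))
```

On this toy data the parameters settle close to the values used to generate it. With real data the features usually need scaling first, otherwise a learning rate like this one can make the updates overshoot.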

Neat trick, and very useful, but still not what comes to mind when I think of AI. The real fun begins though when the input features described above form the inputs to the first layer of a neural network. These input "neurons" could be the features of a house but they could also be pixels in an image, or any other input data that we'd like to work with. Instead of then outputting a house price, they will give a value to a "neuron" in the next layer of the network.

Each neuron has its own set of parameters (similar to dendrites in the brain) between it and all the neurons in the previous layer. These are adjusted based on how successful the network has been at classifying a particular input (or, more specifically, on that neuron's contribution to how successful it has been, via backpropagation, but that's probably more detail than we need for now).
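As an illustration of how values flow from one layer to the next, here is a minimal forward-pass sketch in plain Python. The weights, biases and inputs are arbitrary made-up numbers, the sigmoid activation is an assumption, and the training step (backpropagation) is deliberately left out.

```python
import math

def sigmoid(z):
    """Squash any number into the range 0 to 1."""
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(inputs, weights, biases):
    """Compute one layer's neuron values from the previous layer's values.

    weights[j] holds neuron j's parameters, one per neuron in the previous layer.
    """
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

# Hypothetical network: 3 inputs -> 2 hidden neurons -> 1 output neuron.
inputs = [0.5, -1.0, 2.0]
hidden_weights = [[0.1, 0.2, 0.3], [-0.4, 0.5, 0.6]]
hidden_biases = [0.0, 0.1]
output_weights = [[1.0, -1.0]]
output_biases = [0.0]

hidden = layer_forward(inputs, hidden_weights, hidden_biases)
output = layer_forward(hidden, output_weights, output_biases)
print(hidden, output)
```

Each neuron here is a weighted sum followed by a squashing function, so a whole layer amounts to one matrix multiplication.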

It turns out that GPUs that have been optimised for gaming are super-fast at matrix multiplication, and that's very good news for neural networks because they require a lot of it while training. They need much less once they are in use, so you can train a network on a very powerful GPU and then run it on cheaper hardware.

The course gets more exciting from there with programming assignments and case studies that include classifying emails, reading text, anomaly detection, product recommender systems and many others, all using Octave or MATLAB.

How could it be used?

The technology now exists for computers to do many things that were not previously possible. Often a client will have had a requirement or feature request and the developer would have said something like "that's impossible, how can the computer know xyz". Many of these features are now possible and many have already been implemented. These advances have happened so fast that many clients and developers aren't aware of what can now be done relatively easily.

For example, in the UK you can apply for a passport online and submit a photo, but there are strict guidelines about the photo. Already, ML is accepting and rejecting photos, deferring to a human only if the user insists that a photo is being rejected unfairly.

There are many absolutely incredible examples of what neural networks can do today at the cutting edge of the research such as:

- Self-driving cars:

https://www.youtube.com/watch?v=cfRqNAhAe6c

- Remove the background from images:

https://www.remove.bg/

https://clippingmagic.com/

- Make a video of anyone you choose saying anything or doing anything you want them to:

https://techcrunch.com/2016/03/18/this-system-instantly-edits-videos-to-make-it-look-like-youre-saying-something-youre-not/

- Generate photos of people who never existed:

https://petapixel.com/2018/12/17/these-portraits-were-made-by-ai-none-of-these-people-exist/

- Increase the resolution and quality of images:

https://petapixel.com/2017/10/31/website-uses-ai-enhance-photo-csi-style/

- Colourize black and white photos:

https://petapixel.com/2018/05/09/google-photos-to-use-ai-to-colorize-black-and-white-photos/

- Create a realistic photo from a very rough sketch:

https://affinelayer.com/pixsrv/index.html

- Create a photo from a text description:

http://digg.com/2018/text-to-image-generator-ai-cris-valenzuela

- Lip read from video:

https://arxiv.org/abs/1611.01599

Andrew Ng (pronounced "Eng") says in many of his talks that ML is now ready to be used in all sorts of applications and indeed it's very likely that you use ML every day already if you use Google Home, Amazon's Alexa, Facebook, Snapchat or many other online services.

So what features can now be built easily and cheaply into your website or your business that were previously impossible? Here are some ideas:

- Present the products and offers that are most likely to appeal to the customer.

- Automatically detect spammy product reviews.

- Automatically add products to relevant categories.

- Automatically detect unusual online orders and ask the customer if they're sure.

- Pop up a chat window or help when a customer seems to be stuck.

- Check that any user generated content is correct and meets guidelines.

- Allow voice searches for products and questions very easily.

- Add a chatbot (although these aren't currently very good and won't be until we're close to true AI which is likely 10 years away according to Ray Kurzweil).

Also:

- Run ideas past us - we'll now be able to say with confidence what is easily possible in ML and what is not!

What's next?

It seems likely to me that ML functionality will start to be added to software libraries and start to become part of every developer's standard toolkit. The rate of progress in this field in the last year is incredible - almost every month an amazing new neural network appears online doing something that has previously been considered impossible such as upscaling or colourizing images. Things in this field are starting to get very interesting indeed!

What did you think? Do you have an idea for an ML project? Let us know below!
