[Case Study] The Good Care Group Speed Improvements

About the Site

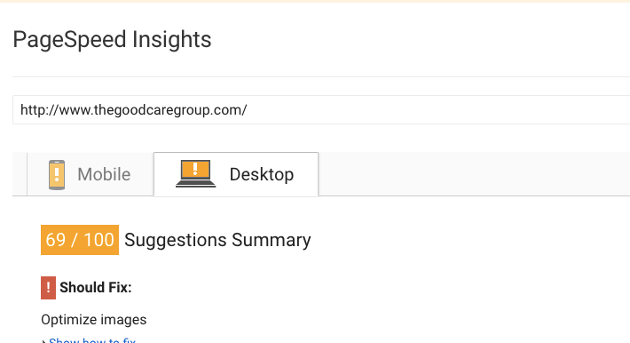

The Good Care Group is a UK national company specialising in live-in care for the elderly and disabled. Their website was built using the Concrete5 CMS and we host the site on its own SSD VPS. Unfortunately they weren't scoring highly on Google PageSpeed Insights.

I was given the task of increasing the score above 80 (into the green) on both desktop and mobile - the main reason for this was to increase the page ranking of the site. Below I’ve given a brief overview of the steps I took to boost the site speed significantly.

N.B. Although this was a Concrete5 site, most of these changes can be applied to any site without too much trouble (although some of the server config modifications may not be possible on cheaper hosting packages).

Enable GZIP compression

Enabling Gzip compression means that files will be compressed on the server and then decompressed in the browser once they have been received. This was a nice and easy first step: I simply added the following to the .htaccess file:

    AddOutputFilterByType DEFLATE text/html
    AddOutputFilterByType DEFLATE text/css
    AddOutputFilterByType DEFLATE text/javascript
    AddOutputFilterByType DEFLATE text/xml
    AddOutputFilterByType DEFLATE text/plain
    AddOutputFilterByType DEFLATE image/x-icon
    AddOutputFilterByType DEFLATE image/svg+xml
    AddOutputFilterByType DEFLATE application/rss+xml
    AddOutputFilterByType DEFLATE application/javascript
    AddOutputFilterByType DEFLATE application/x-javascript
    AddOutputFilterByType DEFLATE application/xml
    AddOutputFilterByType DEFLATE application/xhtml+xml
    AddOutputFilterByType DEFLATE application/x-font
    AddOutputFilterByType DEFLATE application/x-font-truetype
    AddOutputFilterByType DEFLATE application/x-font-ttf
    AddOutputFilterByType DEFLATE application/x-font-otf
    AddOutputFilterByType DEFLATE application/x-font-opentype
    AddOutputFilterByType DEFLATE application/vnd.ms-fontobject
    AddOutputFilterByType DEFLATE font/ttf
    AddOutputFilterByType DEFLATE font/otf
    AddOutputFilterByType DEFLATE font/opentype
    BrowserMatch ^Mozilla/4 gzip-only-text/html
    BrowserMatch ^Mozilla/4\.0[678] no-gzip
    BrowserMatch \bMSIE !no-gzip !gzip-only-text/html

Leverage Browser Caching

Browser caching allows the user's browser to cache resources and tells it how long to cache each file type for. As with enabling compression, the caching rules for the files hosted on the server could be set by adding the following to the .htaccess file:

    ExpiresActive On
    ExpiresByType image/jpg "access 1 year"
    ExpiresByType image/jpeg "access 1 year"
    ExpiresByType image/gif "access 1 year"
    ExpiresByType image/png "access 1 year"
    ExpiresByType text/css "access 1 month"
    ExpiresByType text/html "access 1 month"
    ExpiresByType application/pdf "access 1 month"
    ExpiresByType text/javascript "access 3 month"
    ExpiresByType text/x-javascript "access 3 month"
    ExpiresByType application/x-javascript "access 3 month"
    ExpiresByType application/javascript "access 3 month"
    ExpiresByType application/x-shockwave-flash "access 3 month"
    ExpiresByType image/x-icon "access 1 year"
    ExpiresDefault "access 3 month"

But what about external resources? These are files which are stored on another server; for example, it's quite common to serve the JavaScript library jQuery from a Content Delivery Network (CDN). You cannot control the expiry headers for these external files, because they are set by the server that hosts them.

Where possible we saved local copies of the files. This is fairly good practice anyway, as it reduces your dependency on external systems which you do not control. Some of these resources, however, were related to tracking, e.g. Google’s own analytics.js (yes, Google flags up its own scripts!). This raised another issue, as these files are often updated and are not designed to be hosted locally. Our solution was to create a script that checks the latest version of the resource and downloads it if it’s different (this runs once a day). While not ideal, it does work and it gave us a few more page speed points!

Although the above script allowed me to clear the majority of the flagged resources, there were some that couldn’t be stored on the server. These were signal files sent periodically after page load by the live chat client and Facebook tracking. Unfortunately there was no way around this without removing the live chat feature (or finding a better chat client).
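The daily check for locally hosted third-party scripts could look roughly like the sketch below. This is not the script we actually run, just a minimal illustration of the idea: the function names are hypothetical, and it simply downloads the remote file every time and only rewrites the local copy when the bytes differ.

```python
import urllib.request
from pathlib import Path
from typing import Callable


def download(url: str) -> bytes:
    """Fetch the current remote version of a resource."""
    with urllib.request.urlopen(url, timeout=30) as response:
        return response.read()


def refresh_if_changed(
    url: str,
    dest: Path,
    fetch: Callable[[str], bytes] = download,
) -> bool:
    """Update the local copy at dest if the remote file differs.

    Returns True when dest was written, False when it was already current.
    The fetch parameter exists only so the download can be swapped out
    (for example in a test); it is an assumption of this sketch.
    """
    remote = fetch(url)
    if dest.exists() and dest.read_bytes() == remote:
        return False

    dest.parent.mkdir(parents=True, exist_ok=True)
    # Write to a temporary file first, then swap it into place, so the web
    # server never serves a half-written script.
    tmp = dest.with_name(dest.name + ".tmp")
    tmp.write_bytes(remote)
    tmp.replace(dest)
    return True
```

Run once a day (from cron, for example) with a call such as `refresh_if_changed("https://www.google-analytics.com/analytics.js", Path("script-checker/scripts/analytics.js"))`; the destination path here is illustrative.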

Reducing the Page Size

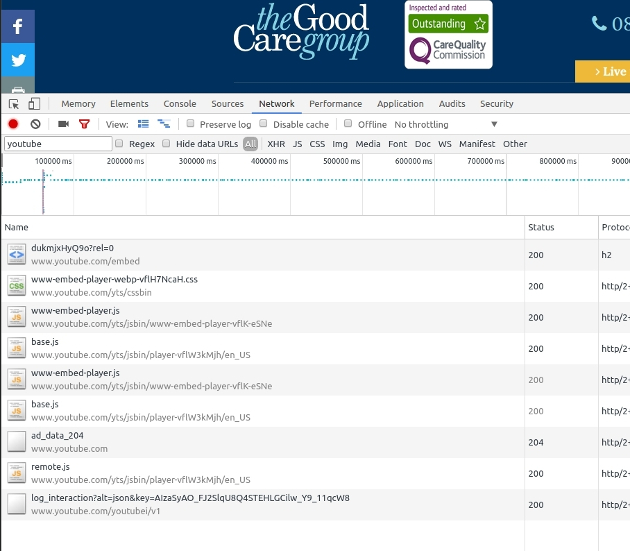

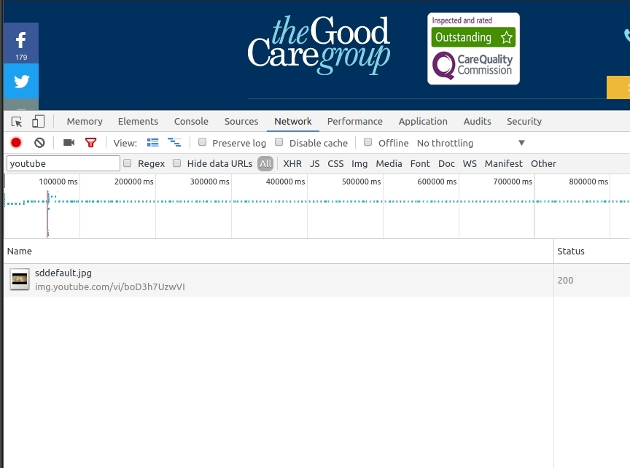

A lot of pages on the site contain youtube videos. Usually when you embed a video it will load in all the resources required to play the video everytime the page loads, this produces many unneccassary requests which can lead to a slow load (especially if the user never watches the video!). Luckily there is a good solution to this, instead of loading in the iframe and all the resources on page load, we only load 1 image (which is the video thumbnail, available from youtube). When the user then clicks on the image, the video is loaded.

This reduces the requests per videos from this:

To this:

So far we have only implemented this lazy-load on the home page, but we will be converting all the YouTube videos on the site in the near future.
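As a rough illustration of the click-to-load approach, the sketch below generates the placeholder markup on the server side. It is not the code used on the site: the function name, the `lazy-video` class and the 11-character video ID check are assumptions made for the example. The thumbnail comes from YouTube's image host, and the iframe markup is kept in a data attribute until the user clicks.

```python
import re
from html import escape


def lazy_youtube_placeholder(video_id: str, title: str = "Play video") -> str:
    """Return HTML that shows only a thumbnail until the user clicks it."""
    # Assumption: video IDs are 11 characters of letters, digits, "_" or "-".
    if not re.fullmatch(r"[A-Za-z0-9_-]{11}", video_id):
        raise ValueError(f"unexpected video id: {video_id!r}")

    thumbnail = f"https://img.youtube.com/vi/{video_id}/hqdefault.jpg"
    iframe = (
        f'<iframe src="https://www.youtube.com/embed/{video_id}?autoplay=1" '
        f'allow="autoplay; encrypted-media" allowfullscreen></iframe>'
    )

    # The iframe markup is escaped into a data attribute, so the browser does
    # not request anything from the player until the click handler swaps the
    # placeholder for the real iframe.
    return (
        f'<div class="lazy-video" data-embed="{escape(iframe, quote=True)}" '
        f'onclick="this.outerHTML = this.dataset.embed">'
        f'<img src="{thumbnail}" alt="{escape(title, quote=True)}">'
        f"</div>"
    )
```

On page load the browser fetches a single image per video; everything the player needs is only requested after the click.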

Optimize Images

Fortunately there wasn’t too much to do here, as Concrete5 does a good job of compressing images that are uploaded. There were a few, however, that I had to compress manually (including the mobile logo and some small png icons). A useful tool for this is https://tinypng.com/.

Reduce Server Load Time

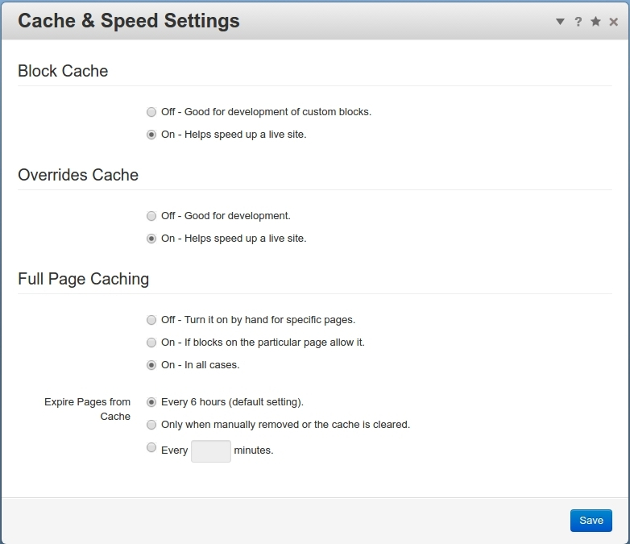

Google wants a TTFB (Time To First Byte) of around 0.2s. This is the time it takes to make any DB queries and process any back-end logic; in other words, the time at which the server begins to send the page. This can be quite hard to achieve on CMS sites, and especially on ecommerce sites (as they are so dynamic). Luckily, the Good Care Group site isn’t updated frequently, so I was able to take full advantage of the Concrete5 caching engine.

As you can see, there are several options. For this site it was safe to switch on full caching. Of course, all sites are different, and it can be worth playing around with the options to work out what works best.
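When trying out different caching options, it can help to measure TTFB yourself rather than waiting on the PageSpeed report. The sketch below is one rough way to do that using only the Python standard library. The function name is hypothetical, and the figure includes connection setup (DNS, TCP and TLS), so it will not match Google's number exactly.

```python
import http.client
import time
from urllib.parse import urlsplit


def time_to_first_byte(url: str, timeout: float = 10.0) -> float:
    """Return the seconds between sending a GET and receiving the response headers."""
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.netloc, timeout=timeout)
    elif parts.scheme == "http":
        conn = http.client.HTTPConnection(parts.netloc, timeout=timeout)
    else:
        raise ValueError(f"unsupported URL scheme: {parts.scheme!r}")

    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query

    try:
        start = time.perf_counter()
        conn.request("GET", path)  # opens the connection and sends the request
        conn.getresponse()         # returns once the status line and headers arrive
        return time.perf_counter() - start
    finally:
        conn.close()
```

Calling this a few times against the same page before and after changing the cache settings gives a quick sense of whether the change moved the server response time towards the 0.2s target.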

At this point I hit a bit of a wall. I’d covered the obvious things and I was getting pretty good results (desktop occasionally got a green score). I didn’t really want to sink large amounts of time into restructuring the css/js to eliminate render-blocking resources and prioritizing the above-the-fold content, both of which were going to be long, laborious tasks.

After a bit of research I discovered Google’s PageSpeed module for Apache and nginx. This handles a wide array of server optimisations including (but not limited to) combining css/js, inlining css, prioritizing critical css and minifying html. It does a lot of heavy lifting and is the key to getting those elusive green scores. Below are the specific rules I used:

    ModPagespeedRewriteLevel CoreFilters
    ModPagespeedEnableFilters extend_cache
    ModPagespeedEnableFilters prioritize_critical_css
    ModPagespeedEnableFilters defer_javascript
    ModPagespeedEnableFilters sprite_images
    ModPagespeedEnableFilters inline_google_font_css,inline_css
    ModPagespeedEnableFilters convert_png_to_jpeg,convert_jpeg_to_webp
    ModPagespeedEnableFilters collapse_whitespace,remove_comments
    ModPagespeedEnableFilters rewrite_javascript,rewrite_css,lazyload_images
    ModPagespeedDisallow "*/script-checker/scripts/*.js"

Most of these are self-explanatory; however, the big one for me was ‘prioritize_critical_css’. This filter analyses the above-the-fold content and inlines the css necessary to render this section (this would have been a huge, time-consuming job to do manually). The module has lots of filters available which I won't go into, but you can find out more in the PageSpeed module documentation.

Conclusion

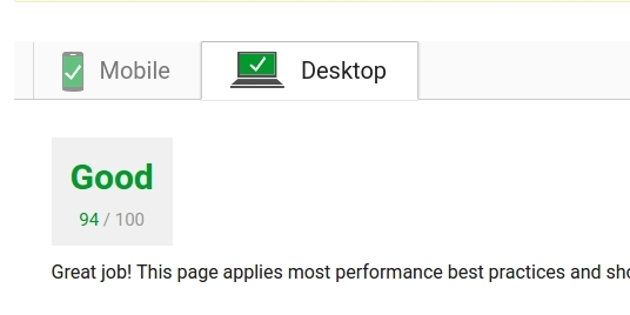

So in the end I was able to get the score up to the mid 90s on desktop:

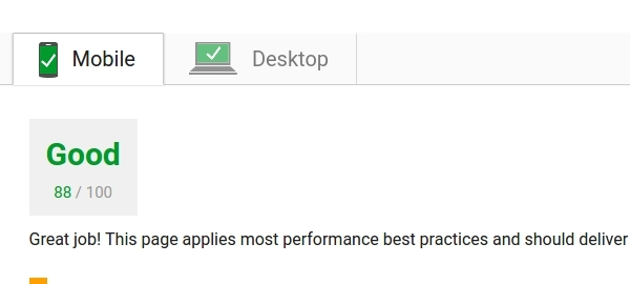

And high 80s on mobile:

As you can tell when browsing the site, it’s very quick. The main issue preventing me from getting to 100 was the live-chat signals, which are impossible to cache (and, in my opinion, shouldn’t be flagged by this tool), so reaching 100 wouldn't be possible without sacrificing features of the site.
